August 8th, 2022 Cleaning up a multi-region mess By Jeffrey M. Barber

I’ve started to make a mess of things. Fortunately, it is recoverable, so come along as I untangle my multi-region mess. If I haven’t mentioned it here, then the internal plan is to have Adama run on the edge close to people. Ideally, I want a heterogeneous fleet of clusters spread across the globe running on different vendors. I’m evaluating using PlanetScale for the control plane, and it’s looking like the control plane will be located in the US where I may shard at a tenant level. I’m still thinking about the database, but the open question at hand is how to build the multi-region data plane. As a design detail, documents are stored with Amazon S3 and then rehydrated into one region with periodic snapshots, so keep that in mind. In particular, documents will live on a single host which I’ll write about more at a later time.

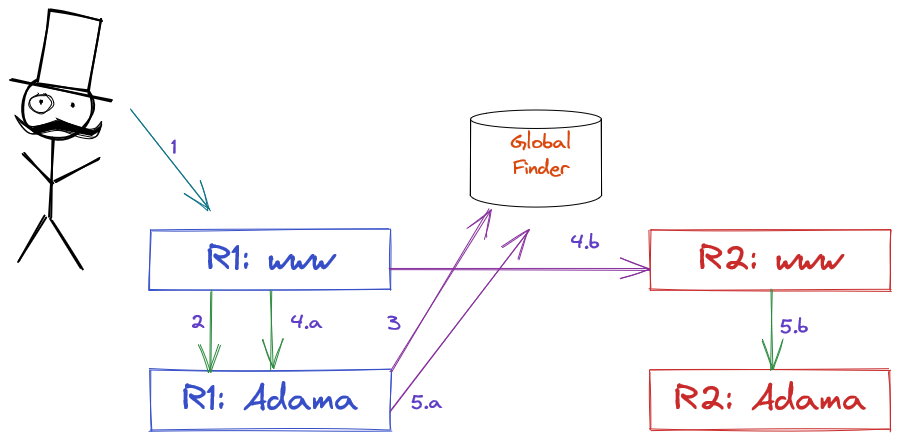

So, let’s untangle this mess:

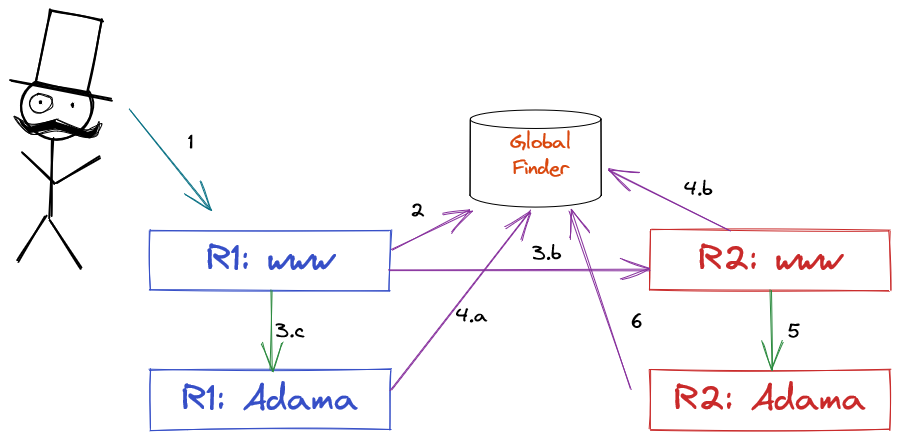

This is where things were heading as I was slinging code around willy-nilly, and then I realized as I was placing the final keystone for making it work… It’s a giant mess and slow with many cross-region calls. Let’s dig in and sort out a few scenarios.

Scenario: Document is in cold storage

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host looks up where the machine is with the database | 150 ms |
| 3.c | web host sees nothing and routes to local Adama | 1 ms |
| 4.a | Adama doesn’t have the data, so it talks to the database to bind it | 150 ms |

Roughly speaking, 310ms for a document to start processing.

Scenario: Document is warm in local region

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host looks up where the machine is with the database | 150 ms |
| 3.c | web host sees a machine in region and routes to it | 1 ms |
| - | Adama has the data already and starts processing | 1 ms |

Warm data will be much faster at ~162ms.

Scenario: Document is warm in remote region

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web tier looks up where the machine is with the database | 150 ms |
| 3.b | web tier sees the document belongs in a remote region and proxies to that region | 150 ms |
| 4.b | remote web host looks up where the machine is and sees it’s within region | 150 ms |
| 5 | remote web host sends to remote Adama | 1 ms |
| - | Adama has the data and sends it along | 1 ms |

A stupendous ~462ms for a remote region connect! It’s worth noting that we can’t have a cold document route to a different region since there is no mapping to that region, and this is an important property to maintain.

The latencies of the various scenarios sit at ~162ms, ~310ms, and ~462ms, and this can be improved. We are already abusing the fact that Adama knows if it has the document, and we will discuss in a future post the downsides of having a single machine responsible for an experience. However, we can observe that having web talk to the database for routing seems like a bad idea. Instead, maybe we should move it to Adama?
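As a quick sanity check, the totals quoted for the three scenarios can be reproduced by summing the sequential hops. This is only arithmetic over the step costs in the tables above; the names `SCENARIOS` and `connect_latency_ms` are made up for illustration.

```python
# Step costs in milliseconds, copied from the three scenario tables above.
SCENARIOS = {
    "cold storage": [10, 150, 1, 150],
    "warm in local region": [10, 150, 1, 1],
    "warm in remote region": [10, 150, 150, 150, 1, 1],
}


def connect_latency_ms(steps):
    """Hops happen one after another, so the connect latency is their sum."""
    return sum(steps)


TOTALS = {name: connect_latency_ms(steps) for name, steps in SCENARIOS.items()}

for name, total in TOTALS.items():
    print(f"{name}: ~{total} ms")
```

Every scenario pays the 150 ms finder lookup at the web tier, which is why even the warm local case cannot get below roughly 160 ms in this layout.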

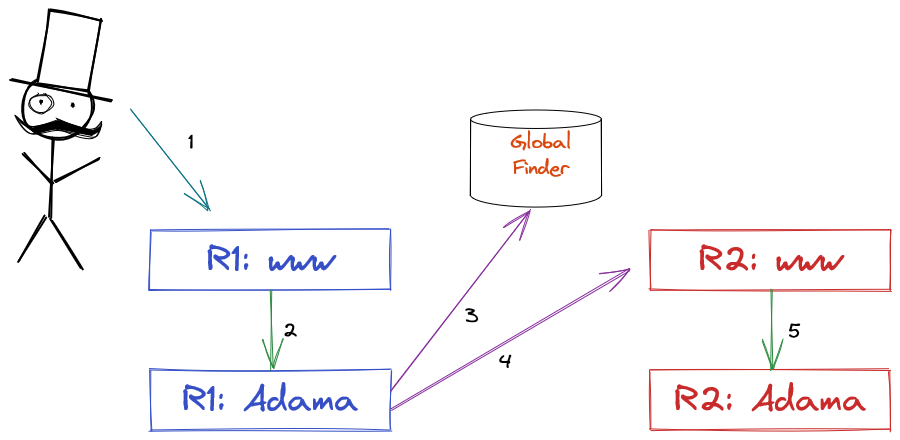

Move who decides to go cross region

Cleaning up the lines helps us assess the situation, so let’s redrive the scenarios.

Scenario: Document is in cold storage

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host routes to the machine within region | 1 ms |
| 3. | Adama doesn’t have the data, so it talks to the database to bind it | 150 ms |

We go from 310ms to 162ms! W00t!

Scenario: Document is warm in local region

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host routes to the machine within region | 1 ms |
| x | Adama has the data already and starts processing | 1 ms |

From 162ms to 12ms, and that’s the fun part.

Scenario: Document is warm in remote region

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host routes to the machine within region | 1 ms |
| 3 | Adama talks to database to learn of another region | 150 ms |
| 4 | Adama forwards to a remote web host | 150 ms |
| 5 | Web host forwards to appropriate machine | 1 ms |

From ~462ms to ~312ms is a good reduction. The above represents an almost ideal world, but the reality of the situation is more complex, and this doesn’t take into consideration active replication of the finder table for read replicas. Furthermore, we have introduced an extra hop which reduces the total reliability of the stream.
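To make the reliability cost of the extra hop concrete, here is a small worked example. It assumes hops fail independently and uses a made-up per-hop success rate of 99.9%; neither assumption comes from measurements, and the hop counts are read off the two remote-region tables (three network hops before the change, four after).

```python
import math


def stream_reliability(hop_success_rates):
    """Chance that every hop in the chain works, assuming independent failures."""
    return math.prod(hop_success_rates)


# Hypothetical per-hop success rate; an assumption for illustration only.
PER_HOP = 0.999

# Before: person -> web -> remote web -> remote Adama (3 hops).
three_hops = stream_reliability([PER_HOP] * 3)
# After: person -> web -> Adama -> remote web -> remote Adama (4 hops).
four_hops = stream_reliability([PER_HOP] * 4)

print(f"3 hops: {three_hops:.6f}")
print(f"4 hops: {four_hops:.6f}")
```

The absolute difference is small under these numbers, but every hop added to a long-lived stream is another place for it to break.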

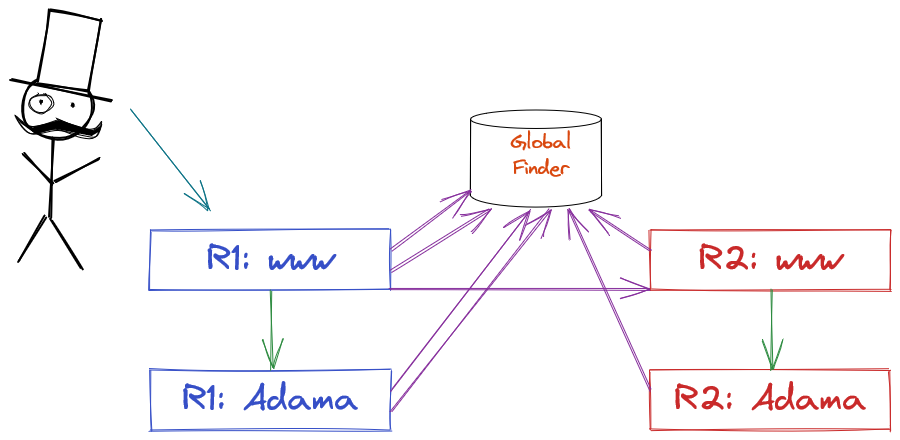

The Actual Mess

Reality is more complicated as the layers are a bit isolated from each other, and the finder is used in many places. This routing decision used to be cheap as it was in-region, so the finder is abused a bit with nearly no caching.

The abuse of the database is evident, and it’s also kind-of needed for one reason: error handling. Errors happen in two kinds. First, the ephemeral routing issues due to network and machine health. Second, load shedding due to capacity management. The second kind is what makes caching exceptionally hard because we don’t want an invalid routing to stick. We will introduce a third magical error which we will induce: route optimization. For route optimization, the first person to connect has an exceptional advantage over the placement of a document. For instance, if one player in India starts a game and three players in the US play then this is only optimal for one person. It may be a better experience to migrate the document to the centroid of all connected players. This requires leveraging the idioms created by capacity management to move the document.
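The centroid idea can be sketched as picking the region that minimizes the summed latency to every connected player. The latency table and the function name `best_region` below are hypothetical, and a real placement decision would also have to weigh the cost of moving the document.

```python
# Hypothetical round-trip latencies in ms, keyed by (player region, document region).
LATENCY_MS = {
    ("india", "india"): 10,
    ("india", "us"): 250,
    ("us", "india"): 250,
    ("us", "us"): 10,
}


def best_region(player_regions, candidate_regions, latency_ms):
    """Return the candidate region with the lowest total latency to all players."""

    def total_cost(candidate):
        return sum(latency_ms[(player, candidate)] for player in player_regions)

    return min(candidate_regions, key=total_cost)


# One player in India started the game, then three players in the US joined.
players = ["india", "us", "us", "us"]
print(best_region(players, ["india", "us"], LATENCY_MS))
```

With these made-up numbers, keeping the document in India costs 760 ms in total while moving it to the US costs 280 ms, so the document would migrate.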

My initial gut was to cache it within Adama or leverage regional database caches, but this manifests an exceptionally hard cache invalidation story. A beautiful way to resolve cache invalidation is to leverage a stream to the caching host providing read traffic from an authoritative host. Since I’m using MySQL, this fundamentally would require an in-region database read replica kept up to date.
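For illustration, stream-driven invalidation might look like the following on the caching side. This is a minimal in-memory sketch, not how MySQL replication works: `RouteUpdate`, `RouteCache`, and the sequence-number scheme are all assumptions, and it presumes the stream delivers updates in order so that anything at or below the last applied sequence number is a replay.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class RouteUpdate:
    seq: int                # increasing sequence number assigned by the authority
    key: str                # document key
    target: Optional[str]   # machine holding the document, or None when unbound


class RouteCache:
    """Read-only routing cache kept current by an ordered update stream."""

    def __init__(self) -> None:
        self._routes: Dict[str, str] = {}
        self._last_seq = 0

    def apply(self, update: RouteUpdate) -> bool:
        """Apply one streamed update; replays of already-seen updates are ignored."""
        if update.seq <= self._last_seq:
            return False
        self._last_seq = update.seq
        if update.target is None:
            self._routes.pop(update.key, None)   # binding removed: invalidate
        else:
            self._routes[update.key] = update.target
        return True

    def lookup(self, key: str) -> Optional[str]:
        return self._routes.get(key)
```

The cache never decides on its own when an entry is stale; it only mirrors what the authoritative host tells it, which is what makes the invalidation story tractable.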

This will improve the nastiness of the latency situation, but this seems silly as this introduces more disks and yet another thing to manage. This technique is useful in general, but I don’t like it for this scenario.

Alternatively, I considered using Adama as a cache using consistent hashing to front-load the database. The authoritative Adama would broadcast cache updates globally through web hosts. While this could work, it requires a lot of details to be right. For example, consistent hashing would require invalidating the cache when a document mapping is no longer valid. It just becomes too much.
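For readers unfamiliar with consistent hashing, a generic hash ring looks roughly like this. It is a textbook sketch and not Adama's implementation; the `HashRing` class, the host names, and the virtual node count are invented for the example.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Stable 64-bit hash so every host computes the same ring."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Maps keys to hosts; removing a host only remaps the keys it owned."""

    def __init__(self, hosts, vnodes=64):
        # Each host gets several points on the ring to smooth out the distribution.
        self._ring = sorted(
            (_hash(f"{host}#{i}"), host) for host in hosts for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def host_for(self, key: str) -> str:
        if not self._ring:
            raise ValueError("ring has no hosts")
        # First point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._ring)
        return self._ring[idx][1]


ring = HashRing(["adama-1", "adama-2", "adama-3"])
print(ring.host_for("space/document-key"))
```

The pain point described above shows up when the host set changes: some keys silently move to a new owner, and any cache entry keyed by the old owner has to be invalidated.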

Instead, I think the best thing to do is to fix all the code such that the database lookups are not needed. Sadly, this requires details to come up the stack. For example, Caravan doesn’t store the document key on disk. Instead, it stores a document_id which is found only via finder. The key question to ask is: which machine should have the job of executing retry? Where retry happens is exceptionally important as it will determine which failures are terminal. First, the retry must happen on the client side because the first web host may fail. However, it would be wasteful to expose all possible errors up to the client, so the next place is the web host. Only the first hop needs to perform retry for all the error mode shenanigans.
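A rough sketch of what retry at the first web host could look like is below. The exception classes, `connect_with_retry`, and the attempt limit are hypothetical; the point is only that retryable failures (ephemeral routing issues, load shedding) are absorbed at this hop and the client sees a single terminal error once attempts run out.

```python
class RetryableError(Exception):
    """Ephemeral routing failure or load shedding; another attempt may succeed."""


class TerminalError(Exception):
    """Retrying cannot help; this is what gets surfaced to the client."""


def connect_with_retry(attempt, max_attempts=3):
    """Run attempt(n) until it succeeds or the retry budget is spent."""
    last_error = None
    for n in range(max_attempts):
        try:
            return attempt(n)
        except RetryableError as err:
            last_error = err  # remember the cause and try again with a fresh lookup
    raise TerminalError(f"gave up after {max_attempts} attempts") from last_error


def flaky(n):
    # Pretend the first two routes were stale and the third one works.
    if n < 2:
        raise RetryableError("routed to a machine that shed the document")
    return "connected"


print(connect_with_retry(flaky))
```

A real version would likely add backoff and refresh the routing record between attempts; both are left out to keep the sketch short.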

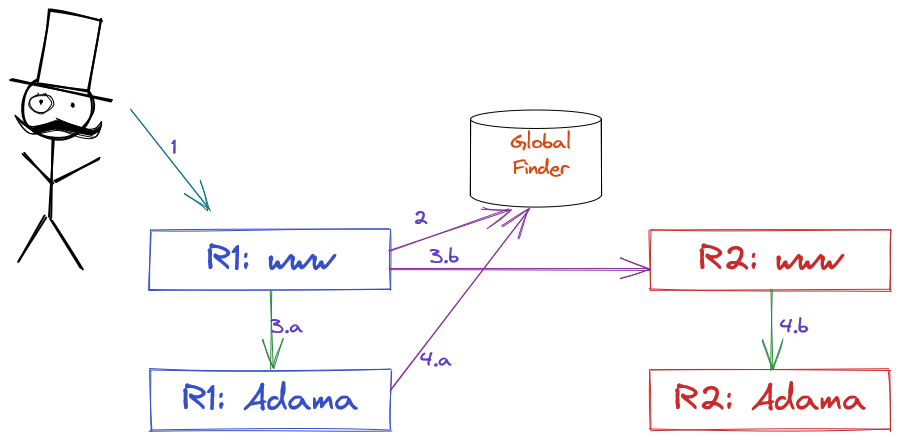

This is only slightly better than the initial diagram, but it is an achievable place for the code given enough fixing.

Scenario: Document is in cold storage

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host looks up where the machine is with the database | 150 ms |
| 3.a | web host sees nothing and routes to local Adama | 1 ms |
| 4.a | Adama doesn’t have the data, so it talks to the database to bind it | 150 ms |

Similar to the first model, we remain at 310ms.

Scenario: Document is warm in local region

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host looks up where the machine is with the database | 150 ms |
| 3.a | web host sees a machine in region and routes to it | 1 ms |
| - | Adama has the data already and starts processing | 1 ms |

Dang it, we are still at ~162ms.

Scenario: Document is warm in remote region

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web tier looks up where the machine is with the database | 150 ms |
| 3.b | web tier sees the document belongs in a remote region and proxies to that region with the appropriate routing record | 150 ms |
| 4.b | remote web host uses given routing record to route to Adama | 1 ms |
| x | Adama has the data and sends it along | 1 ms |

This achieves 312ms which is parity with the ideal world. However, we are not as good as we can be. We can optimize this one additional step by turning Adama into a finder proxy.

The web tier will now guess that the document is within the region and route to the appropriate host using consistent hashing rules. This creates a split brain over whether the routing rule was correct or not. In the failure case, the behavior should mirror the above. However, the correct case should be true 99%+ of the time. This will lower the mean connect latency while maintaining the p99. This will primarily affect the Document is warm in local region scenario when the routing rule is correct:

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host guesses which Adama hosts the document; Adama has the data and returns the appropriate record | 1 ms |
| 4.a | Adama has the data already and starts processing | 1 ms |

And we have a p90 of 12ms in this case. We can further lower the Document is in cold storage scenario if we introduce a new findbind operation to the finder which will need to use a stored procedure such that the proxy can attempt to take over the routing if it can. It will atomically find the record and optimistically bind it.

| step | what | cost |
|---|---|---|
| 1. | person connects to their local friendly edge node | 10 ms |
| 2. | web host guesses which Adama hosts the document. Adama doesn’t have data and issues a findbind to the database; since the document was bound to this host, load the document for 30 seconds | 151 ms |
| 4.a | Adama has the data already and starts processing | 1 ms |
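The semantics of findbind can be illustrated with an in-memory stand-in. In the design above this would be a stored procedure in the database; here a lock plays the role of the atomic step, and `InMemoryFinder`, the region names, and the machine names are all hypothetical.

```python
import threading


class InMemoryFinder:
    """Toy finder: maps a document key to the (region, machine) that owns it."""

    def __init__(self):
        self._lock = threading.Lock()
        self._bindings = {}

    def findbind(self, key, region, machine):
        """Atomically return the existing binding, or bind the key to the caller.

        The caller compares the result with its own identity to learn whether
        it won the document or must forward to the actual owner.
        """
        with self._lock:
            return self._bindings.setdefault(key, (region, machine))


finder = InMemoryFinder()
# The guessed host finds nothing bound yet, so it takes over the document.
print(finder.findbind("space/doc", "us-east", "adama-1"))
# A later caller in another region is told where the document already lives.
print(finder.findbind("space/doc", "ap-south", "adama-9"))
```

Folding the find and the bind into one round trip is what removes the second 150 ms database call from the cold path.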

The cold scenario goes from ~310ms to ~162ms, and we have achieved the ideal at p90 with the error modes accounted for better. The next step is the hard step of bringing reality in-line with ideal.
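To see what the guess does to the mean, here is the expected connect latency for the warm local case. It assumes a wrong guess costs about the same ~162ms as the database-lookup path (ignoring the extra millisecond for the wasted hop), and the 99% hit rate is the figure quoted above rather than a measurement.

```python
LOCAL_HIT_MS = 12   # guess was right: edge hop, in-region hop, Adama starts
FALLBACK_MS = 162   # guess was wrong: assumed to cost about one finder round trip more


def mean_connect_ms(hit_rate, hit_ms=LOCAL_HIT_MS, miss_ms=FALLBACK_MS):
    """Expected connect latency given how often the routing guess is right."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_ms + (1.0 - hit_rate) * miss_ms


print(f"{mean_connect_ms(0.99):.1f} ms")
```

Under these assumptions the mean lands around 13.5 ms, while the slowest 1% of connects still pay the full database lookup.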

Future

So, this is effectively a design document for how I’m thinking of fixing my cross-region mess. I didn’t plan it out much in advance, which is why I have a mess, but I believe I can fix it once I launch another region. The key is that I first must finish the mess and make it work. This starts the data train where I can measure connect latency end to end. From there, I really should go beyond unit and integration testing, and I need to embrace canaries and acceptance tests which run all the time. Fortunately, finishing cross-region support will create the tools to go hog wild on acceptance testing. Once I have testing producing data, I can confidently work on reducing latency by improving the plumbing and reducing pressure on the database.